AI tech is galloping ahead like a runaway horse, with innovation running rampant and regulation struggling to catch up.

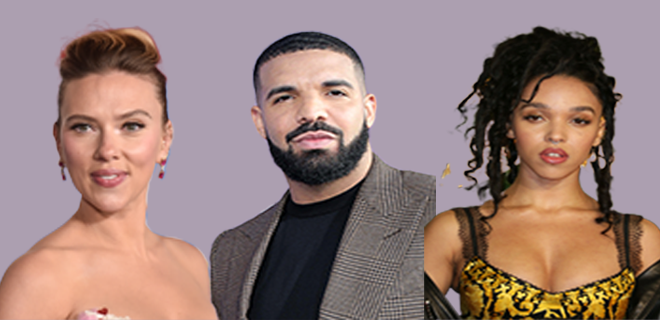

The latest spicy AI scandals involving Scarlett Johansson, Drake, and FKA Twigs reveal that the AI frontier is still the Wild West. What does all of this AI chaos mean for digital media, and is it time to call in the sheriff to rein things in?

Scarlett Johansson’s AI Doppelgänger: Privacy in Peril

Can you imagine waking up and finding your voice hijacked by AI? Scarlett Johansson lived that nightmare when OpenAI released a voice that sounded strikingly like her character in the movie “Her,” without her consent. Is AI playing ventriloquist with our lives? If it can happen to Johansson, it can happen to anyone. This incident slaps us with a harsh reality check on privacy and consent in the digital age.

But the implications go beyond celebrity woes. We’re entering an era where your voice, likeness, and digital self can be cloned and used without your permission. It’s not just spooky; it’s a serious breach of personal boundaries that erodes any sense of privacy.

Using AI to clone celebrity voices without consent raises significant privacy and ethical concerns. While OpenAI contends the voice is not a Johansson clone, the backlash prompted the company to rethink its approach and pause the use of Sky, an AI assistant voice that sounds eerily similar to Johansson’s in “Her.”

Lest we forget, the film is a cautionary tale about the dangers of becoming overly reliant on AI at the expense of genuine human bonds and emotional intimacy.

Drake’s AI Resurrection: Tupac’s Voice from Beyond

Johansson’s case isn’t the only one to call attention to the ethical challenges of AI voice cloning. Take Drake, for example. Amid his recent battle with Kendrick Lamar, the rapper used some technological sorcery to drop “Taylor Made Freestyle,” a track featuring an AI-generated version of Tupac’s voice. The decision ignited a legal firestorm with Tupac’s estate, prompting Drake to remove the song. It also caught the attention of Congress.

This scenario suggests a future where AI resurrects artists to produce new works, raising profound questions about legacy, consent, and artistic integrity. On a recent episode of my podcast, Tech & Soul, with advertising futurist Tameka Kee, I discussed the potential for AI to exploit deceased artists’ archives, turning creative legacies into AI-driven revenue streams with little respect for the original creators. Interestingly, I used Tupac as an example, given how prolific his posthumous releases have been.

Imagine the estates of late artists using AI to turn legacy into loot, churning out new hits from their archives. It’s a vision of remix culture gone mad, risking the sanctity of artistic creation. What’s next, AI-generated Nirvana albums? It’s a slippery slope, folks.

FKA Twigs: Sounding the Alarm in the Senate

The societal ramifications of AI were further underscored when FKA Twigs took her concerns to the Senate. Testifying about the dangers of deepfakes and AI, the singer highlighted the urgent need for regulatory oversight. Her impassioned plea spotlighted concerns about AI creating fake yet convincing content that blurs the lines of reality. It’s like living in a perpetual state of “The Truman Show,” where nothing is quite what it seems.

Twigs also announced that she had developed a deepfake version of herself to handle social media interactions, emphasizing that this was done under her control and with her consent.

Her testimony ties directly into the alarming rise of deepfake political ads and robocalls, which have the potential to mislead voters and disrupt democratic processes. States are already moving to require labels on this kind of deceptive content.

Without proper oversight, AI can be weaponized to manipulate public opinion and undermine trust in the political system. Twigs’ testimony goes beyond protecting celebrities. It’s a call to action to safeguard the integrity of intellectual property and information. Left unregulated, AI could create a dystopian future where trust is a rare commodity and anyone’s likeness can be hijacked for nefarious purposes.

Regulatory Tightrope: Innovation vs. Oversight

So, what does all this mean for digital media companies? The ethical implications of AI are vast, but the benefits for publishers are significant, from Forbes’ generative AI search engine Adelaide to traditional AI automating repetitive tasks. Publishers face a dilemma: embrace AI for its innovative potential or push back to maintain content integrity. The stakes are high, and the path forward isn’t clear-cut.

Do we corral AI with regulations or let innovation roam free? One camp argues that strict rules could stifle creativity, turning our digital gold rush into a bureaucratic slog. But without some form of control, do we risk descending into chaos?

In my chat with Dan Rua from Admiral about the generative AI revenue opportunity for publishers, he pointed out that regulation is inevitable but must be done right. We’re at the dawn of AI, and while lawsuits and licensing debates are on the horizon, the focus should be on responsible use rather than knee-jerk bans.

Some industry insiders advocate for flexible standards over rigid laws. Recent moves by tech giants like Meta, YouTube, and IBM to self-regulate hint at a middle ground. They’re introducing policies to disclose AI use in ads and videos, striving to build trust without crushing innovation.

But self-regulation isn’t a panacea. It relies on companies acting in good faith, which isn’t always guaranteed. That’s why a hybrid approach, combining flexible standards with targeted regulations, might be the best path forward. This approach allows innovation to flourish while protecting consumers and creators from the darker sides of AI.

The Road Ahead for AI in Media

As AI reshapes media, the stories of Scarlett Johansson, Drake, and FKA Twigs are a wake-up call.

These high-profile cases reflect the ethical dilemmas that digital media companies must grapple with as AI continues to evolve. The push for AI innovation often collides with the need to maintain content integrity and protect intellectual property. We’ve seen this dynamic play out as media companies scramble to strike deals with AI firms like OpenAI, sometimes at the cost of their content’s value and authenticity. By handing over content for AI training, publishers are potentially giving away the farm.

Publishers and content creators must advocate for strong standards and ethical practices that prioritize transparency and accountability. By pushing for comprehensive regulations, we can navigate the complexities of AI with a commitment to integrity and respect for the creative process. The future of digital media depends on our ability to harness AI responsibly, ensuring it serves as a tool for innovation rather than a means of exploitation.